Introduction

This project aims to develop a visual navigation system that lets an autonomous vehicle perform automated valet parking: the vehicle must find its way to an available parking space in a garage or parking lot and complete the parking maneuver entirely on its own.

Platform

The visual navigation system is built on the vehicle's Automatic Vehicle Monitoring (AVM) system, which provides four surround-view fisheye cameras. The computational core of the system is the Nvidia Drive PX2.

System Architecture

The system consists of eight main sub-modules, each responsible for its own task. Each sub-module is enabled or disabled according to the current state of the system.

The system operates in two stages: human driving and autonomous driving. The human-driving stage typically occurs when the vehicle needs to build a map of a new parking lot, or to localize itself in an existing map at the start of an autonomous parking task. Once re-localization succeeds, the navigation system takes control of the vehicle until the entire parking task is finished.
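The stage logic described above can be pictured as a small state machine that gates which sub-modules run. The following is a minimal sketch, not the project's implementation: the stage names split "human driving" into the two cases the text mentions, and the module names are hypothetical placeholders, since the eight actual sub-modules are not listed here.

```python
from enum import Enum, auto


class Stage(Enum):
    """Working stages; the two human-driving cases follow the description above."""
    HUMAN_MAPPING = auto()       # human drives while a map of a new lot is built
    HUMAN_RELOCALIZING = auto()  # human drives while the vehicle localizes in a built map
    AUTO_DRIVING = auto()        # navigation system has control of the vehicle
    FINISHED = auto()            # parking task is complete


# Hypothetical module names for illustration only; the real system has
# eight sub-modules whose names and exact gating are not given in this README.
ENABLED_MODULES = {
    Stage.HUMAN_MAPPING: {"visual_odometry", "mapping"},
    Stage.HUMAN_RELOCALIZING: {"visual_odometry", "relocalization"},
    Stage.AUTO_DRIVING: {"visual_odometry", "localization",
                         "space_detection", "planning", "control"},
    Stage.FINISHED: set(),
}


def next_stage(stage: Stage, relocalized: bool = False, parked: bool = False) -> Stage:
    """Advance the stage; control is handed over only after re-localization succeeds."""
    if stage is Stage.HUMAN_RELOCALIZING and relocalized:
        return Stage.AUTO_DRIVING
    if stage is Stage.AUTO_DRIVING and parked:
        return Stage.FINISHED
    # Any other combination (including mapping) leaves the stage unchanged here.
    return stage


def is_enabled(stage: Stage, module: str) -> bool:
    """Return True if the named (hypothetical) module runs in the given stage."""
    return module in ENABLED_MODULES[stage]


if __name__ == "__main__":
    stage = next_stage(Stage.HUMAN_RELOCALIZING, relocalized=True)
    print(stage, is_enabled(stage, "control"))
```

Keeping the per-stage module sets in one table makes the enable/disable rule explicit and easy to audit; the handover condition lives in a single transition function.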

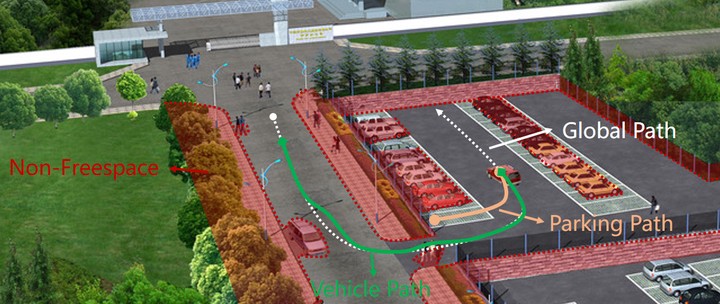

Typical Working Scenarios

- Parking lot

- Garage

Results

Videos are available on YouTube!

- Autonomous Navigation

- Free Parking Space Detection

- Surround-View-Based Visual Odometry
  - Indoor
  - Outdoor

- Hybrid Edge-Based Visual SLAM
  - IPM-based edge filter
  - Semantic cloud-based SLAM
  - Cartographer with edges

- Parking to a Virtual Space