Abstract

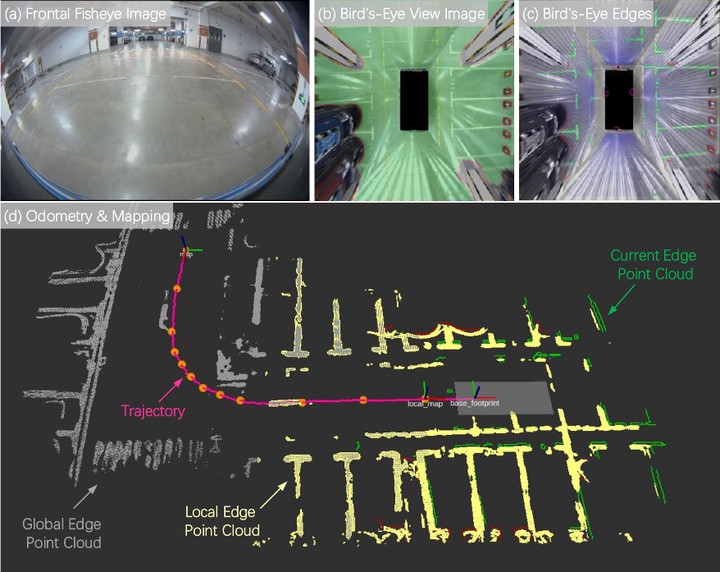

Vision-based localization and mapping is a promising solution for the automated valet parking task. This paper presents a semantic SLAM framework that leverages hybrid edge information on bird’s-eye view images. To extract useful edges from the synthesized bird’s-eye view image and the free-space contours for the SLAM task, different segmentation methods are designed to remove the noisy glare edges and the distorted object edges caused by inverse perspective mapping in view synthesis. Since only the free-space segmentation model needs training, our methods can dramatically reduce the labeling burden compared with previous road-marking-based methods. The remaining incorrect edges are cleaned, and the incomplete edges are recovered, by a temporal fusion of consecutive edges in a local map. Both a semantic edge point cloud map and an occupancy grid map can be built simultaneously in real time. Experiments in a parking garage demonstrate that the proposed framework achieves higher accuracy and performs more robustly than previous point-feature-based methods.