Introduction

The goal of this project is to provide accurate and reliable free space detection for autonomous driving. Free space detection is one of the most important functions of an autonomous vehicle and forms the basis of its navigation system. To this end, we fuse all available and useful information from the different sensors on the vehicle to generate the free space.

Platform

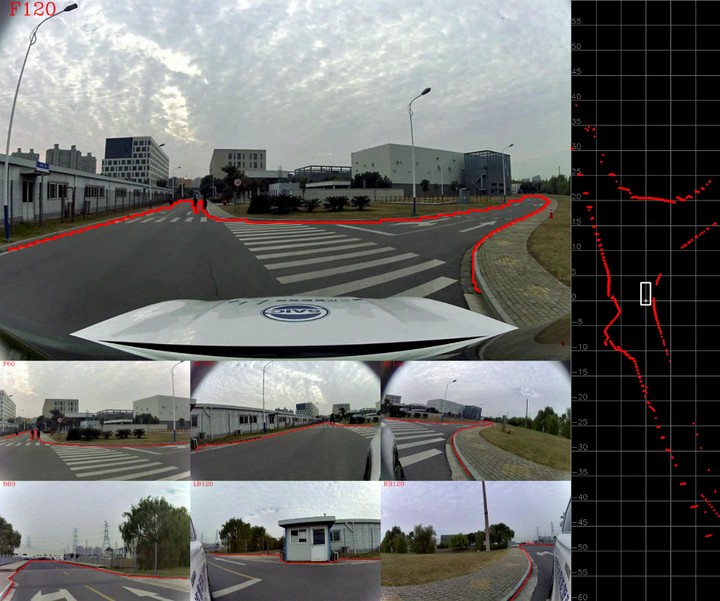

The vision system mounted on the vehicle consists of seven cameras with two types of horizontal field of view (FoV): 60° and 120°. Three LiDAR sensors are also available and can be integrated into the fusion system.

System Architecture

The technology roadmap includes three stages: spatial fusion, temporal fusion and multi-sensor fusion.

In spatial fusion, the free space results from the individual cameras are mapped onto the ground plane by inverse perspective mapping and combined, enabling 360° free space perception.
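As a rough illustration of the idea, the sketch below maps free-space pixels from each camera onto a shared bird's-eye grid and takes their union. It is not the project's implementation: it assumes an undistorted pinhole camera, a flat ground plane, and a vehicle frame with x forward, y left and z up. The names `pixel_to_ground` and `fuse_cameras`, the camera dictionary fields, and all numeric values are hypothetical.

```python
import math


def pixel_to_ground(u, v, cam):
    """Intersect the viewing ray of pixel (u, v) with the ground plane z = 0.

    Returns (x, y) in metres in the vehicle frame, or None if the ray
    points at or above the horizon.
    """
    # Ray for an un-rotated camera looking along vehicle +x
    # (image right -> vehicle -y, image down -> vehicle -z).
    x = 1.0
    y = -(u - cam["cx"]) / cam["fx"]
    z = -(v - cam["cy"]) / cam["fy"]

    # Pitch the camera down by cam["pitch"] (rotation about the y axis).
    cp, sp = math.cos(cam["pitch"]), math.sin(cam["pitch"])
    x, z = x * cp + z * sp, -x * sp + z * cp

    # Yaw the camera by cam["yaw"] (rotation about the z axis, left positive).
    cyaw, syaw = math.cos(cam["yaw"]), math.sin(cam["yaw"])
    x, y = x * cyaw - y * syaw, x * syaw + y * cyaw

    if z >= 0.0:
        return None
    scale = cam["height"] / -z  # distance along the ray to reach z = 0
    return cam["x"] + scale * x, cam["y"] + scale * y


def fuse_cameras(detections, extent=10.0, res=0.5):
    """Union per-camera free-space pixels into one bird's-eye grid.

    detections: list of (cam, pixels), where pixels are (u, v) labelled free.
    Returns the set of (ix, iy) grid cells marked free.
    """
    free = set()
    for cam, pixels in detections:
        for u, v in pixels:
            p = pixel_to_ground(u, v, cam)
            if p is None or abs(p[0]) >= extent or abs(p[1]) >= extent:
                continue
            free.add((int((p[0] + extent) // res), int((p[1] + extent) // res)))
    return free


# Hypothetical calibration values, for illustration only.
front = dict(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0,
             height=1.5, pitch=0.0, yaw=0.0, x=0.0, y=0.0)
left = dict(front, yaw=math.pi / 2)  # same camera, rotated to face left

cells = fuse_cameras([(front, [(540, 760)]), (left, [(540, 760)])])
```

A per-pixel loop keeps the geometry visible; a practical system would more likely precompute a homography or lookup table per camera and warp the whole free-space mask at once.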

In temporal fusion, the temporal connections between consecutive frames are established and the per-frame results are fused to obtain more stable results.
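One simple way to picture this stage is an exponential moving average over per-cell free-space beliefs, which damps single-frame flicker. The sketch below is only an assumption about how such smoothing could look, not the method used in this project. It presumes the grids from consecutive frames have already been aligned to the same vehicle-centred frame (ego-motion compensation is left out), and `temporal_fuse` and `alpha` are hypothetical names.

```python
def temporal_fuse(belief, observation, alpha=0.3):
    """Blend the previous per-cell free-space belief with a new observation.

    Both grids are lists of rows with values in [0, 1] and are assumed to be
    aligned to the same frame. A larger alpha trusts the newest frame more.
    """
    return [
        [(1.0 - alpha) * b + alpha * o for b, o in zip(b_row, o_row)]
        for b_row, o_row in zip(belief, observation)
    ]


# One-row grid with two cells; the first cell flickers to "occupied" in frame 2.
frames = [[[1.0, 0.0]], [[1.0, 0.0]], [[0.0, 0.0]], [[1.0, 0.0]]]

belief = frames[0]
history = [belief]
for obs in frames[1:]:
    belief = temporal_fuse(belief, obs)
    history.append(belief)
```

With these illustrative values the flickering cell dips but stays above 0.5, so a 0.5 threshold would still report it as free.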

In multi-sensor fusion, detection results from different sources and modalities are fused to refine and enrich the free space detection results.
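As an illustration of combining modalities, the sketch below fuses per-cell free-space probabilities from a camera grid and a LiDAR grid by summing log-odds. This is a generic occupancy-grid technique swapped in for illustration, not the project's confirmed method. It assumes the sensors are conditionally independent, a uniform prior of 0.5, and grids that share the same layout. The names `fuse_sensors` and `fuse_grids` are hypothetical.

```python
import math


def _logit(p, eps=1e-6):
    """Log-odds of p, clamped away from 0 and 1 to keep the log finite."""
    p = min(max(p, eps), 1.0 - eps)
    return math.log(p / (1.0 - p))


def fuse_sensors(probabilities):
    """Fuse per-sensor free-space probabilities for one cell.

    None means the sensor did not observe the cell and contributes nothing.
    """
    total = sum(_logit(p) for p in probabilities if p is not None)
    return 1.0 / (1.0 + math.exp(-total))


def fuse_grids(camera_grid, lidar_grid):
    """Apply fuse_sensors cell by cell to two grids of equal shape."""
    return [
        [fuse_sensors([c, l]) for c, l in zip(c_row, l_row)]
        for c_row, l_row in zip(camera_grid, lidar_grid)
    ]


# Illustrative values: agreement, LiDAR blind spot, and disagreement.
camera = [[0.8, 0.8, 0.8]]
lidar = [[0.8, None, 0.2]]
fused = fuse_grids(camera, lidar)
```

Agreement between sensors raises confidence, a cell seen by only one sensor keeps that sensor's estimate, and opposite readings of equal strength cancel out to 0.5.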

Results

Videos are available on YouTube!

- Camera Calibration Pipeline

- Free Space Detection

- Spatial Fusion by Inverse Perspective Mapping

- Spatial Fusion by Sharing Semantics